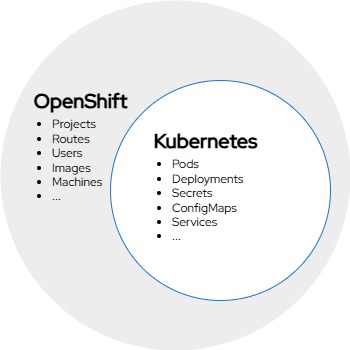

OpenShift vs Kubernetes

The relationship between OpenShift and Kubernetes parallels the relationship between YAML and JSON. YAML is considered a superset of JSON, meaning that it provides all of JSON's functionality as well as some additional features. In the same way, Kubernetes resources will generally operate within an OpenShift environment as is, or with some minor adjustments.

A Kubernetes engineer migrating to OpenShift will do so without much effort, but will not be making effective use of the entire platform.

An OpenShift engineer migrating to Kubernetes, by contrast, will face a higher barrier to migration, but will not be leaving functionality unused.

The following are some of the main resources OpenShift adds to Kubernetes. While they aren’t truly essential to operating the platform, a cursory understanding of them will reduce toil and confusion. (Sorted by decreasing correlation.)

Projects

Kubernetes and OpenShift are designed to allow for multiple tenants. Taking a cue from the Linux kernel, both platforms provide isolated "namespaces" for a tenant’s resources. Resources deployed in one namespace generally do not impact the behavior of resources deployed in another.

How OpenShift implements these isolated spaces with "Projects" differs only slightly from the Kubernetes implementation.

Run the following command to compare the "test" project to the "test" namespace:

# Compare the YAML definition of each resource

diff <(oc get ns test -o yaml) <(oc get project test -o yaml)

# Create a file with the YAML definition of each resource and compare them

oc get namespace test -o yaml > %Temp%\test-namespace.yaml

oc get project test -o yaml > %Temp%\test-project.yaml

FC %Temp%\test-namespace.yaml %Temp%\test-project.yaml

OpenShift has been engineered to make the difference between these resources virtually transparent. When a namespace is created, OpenShift will create a corresponding project and vice-versa.

The only perceivable difference between these resources lies in a project’s ability to leverage OpenShift templates. OpenShift allows a privileged user to modify what is created when a user attempts to create a new project.

Run the following commands to observe the difference:

# Create a new project template and install it.

oc adm create-bootstrap-project-template -o yaml | oc apply -n openshift-config -f -

oc patch project.config.openshift.io/cluster --type=json -p '[{"op":"add","path":"/spec/projectRequestTemplate","value":{"name":"project-request"}}]'

# Confirm that a new project has an additional rolebinding (admin) while the new namespace does not.

oc new-project example-project && oc create ns example-ns

diff <(oc get rolebindings -n example-project -o name) <(oc get rolebindings -n example-ns -o name)

oc get rolebindings -n example-project -o name > %Temp%\rolebindings-project.yaml

oc get rolebindings -n example-ns -o name > %Temp%\rolebindings-namespace.yaml

FC %Temp%\rolebindings-project.yaml %Temp%\rolebindings-namespace.yaml

While it’s true that namespaces isolate resources, they do not necessarily isolate the compute/memory/storage/network that underpins the platform. It’s quite possible to degrade the performance of another tenant’s resources by deploying resources in a separate namespace.

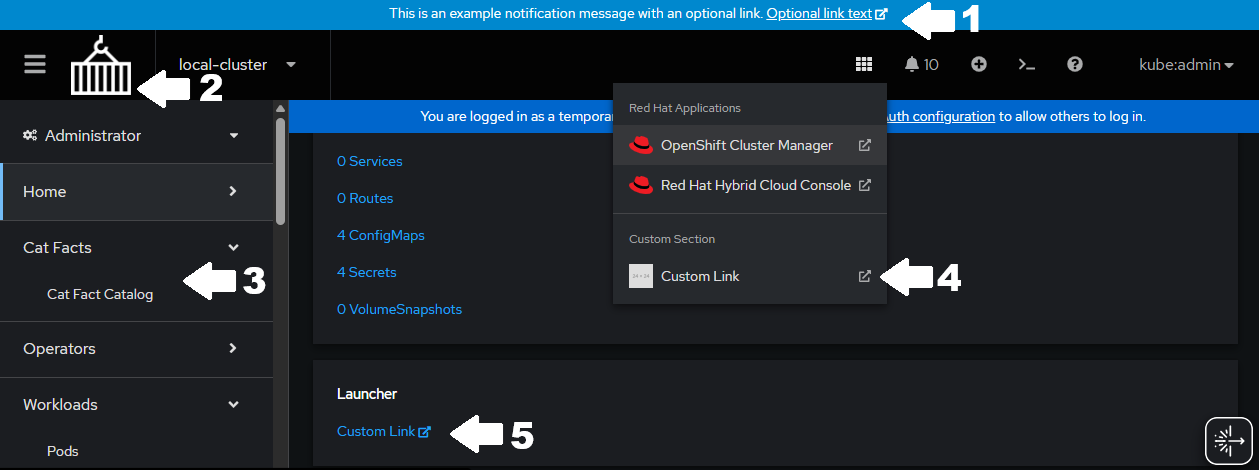

Web Console

Like projects, the additional Web Console that OpenShift provides does not directly impact the behavior of workloads or control components in any way. Unlike projects, however, the difference between what OpenShift and Kubernetes provide is quite perceivable! Kubernetes does not include a graphical user interface by default, but OpenShift does. There are several open source options available for Kubernetes, such as Dashboard, Lens, Headlamp, and Devtron, but onboarding any one of them can be something of a burden when it comes to integration, upgrades, and compliance.

- custom branding
- additional views
- quickstarts
- terminal environments
- even an AI assistant!

- Custom Banners
- Custom Logos
- Custom Sections
- Custom Applications
- Custom Namespace Launchers

Stay tuned!

Routes

Routes are a specific implementation for the generic Kubernetes concern of external application exposure. Because applications hosted in Kubernetes often require a mechanism to serve them on extended/public/global networks, Kubernetes provides a "pluggable" system for this with resources like ingress and gateways.

Historically, routes provided a simple and stable implementation before the ingress API became generally available. Today, they are the "batteries included" solution, but do not preclude the use of other ingress options.

OpenShift will create Route objects for Ingresses that do not specify an

Open Virtual Network Container Network Interface (OVN)

The topic of Container Network Interfaces is extensive, and what follows is not a comprehensive description. For more in-depth coverage of the CNI domain:

There are many CNIs available for Kubernetes consumption. Antrea, Calico, Cilium, NSX-T, and OVN are just a few that all implement the same specification, but in wildly different ways (eBPF, BGP, RDMA, Hardware Offloading…). The standard for OpenShift since version 4.12 is OVN. There are many specific features that OVN provides, but for the purposes of this workshop, only its relationship with kube-proxy will be discussed.

OVN Kubernetes DOES NOT leverage kube-proxy!

In light of this, troubleshooting network connectivity should follow the procedures outlined here. Standard iptables commands are effectively replaced with ovn-nbctl and ovn-sbctl from within the ovnkube-node workload.

You can confirm this by running:

# View the running configuration that indicates KubeProxy status

oc get network.operator.openshift.io cluster -o yaml | grep deployKubeProxy

# View the running configuration that indicates KubeProxy status

oc get network.operator.openshift.io cluster -o yaml | FINDSTR deployKubeProxy

Security

OpenShift takes security very seriously. The entire platform, from hardware to runtime, leverages comprehensive security tooling and practices such as encryption, SELinux, seccomp, image signatures, and system immutability. Kubernetes can be made secure without additional tooling, but OpenShift enforces rather strict configurations by default.

- Security Context Constraints (SCC)
  - Prevent elevated privileges for resources created by specific accounts
  - Enforced at admission on the pod level
- Pod Security Admission
  - Prevent elevated privileges broadly at the namespace level
  - Enforced at admission on the workload level
  - The Pod Security Standards are defined here

The correct approach to resolving issues of either type is to reconfigure the failing workload so that it complies with the default policy. There are several circumstances that might prevent this approach, however. When the workload cannot be configured in a compliant way:

- An appropriate SCC must be added to the account associated with the running workload

oc adm policy add-scc-to-user "SCC" -z "SERVICE_ACCOUNT"

- Or the level of enforcement at the namespace level must be reduced

apiVersion: v1
kind: Namespace
metadata:
  annotations:
    openshift.io/sa.scc.mcs: s0:c31,c10
    openshift.io/sa.scc.supplemental-groups: 1000950000/10000
    openshift.io/sa.scc.uid-range: 1000950000/10000
  labels:
    kubernetes.io/metadata.name: test
    openshift-pipelines.tekton.dev/namespace-reconcile-version: 1.18.0
    pod-security.kubernetes.io/audit: restricted
    pod-security.kubernetes.io/audit-version: latest
    pod-security.kubernetes.io/warn: restricted
    pod-security.kubernetes.io/warn-version: latest
    # Add and configure the two lines below #
    pod-security.kubernetes.io/enforce: restricted
    pod-security.kubernetes.io/enforce-version: latest
  name: test
  ...
spec: {}

In the previous namespace sample, the three annotations are also security configuration associated with SCCs.
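The range annotations in the namespace sample use a `<start>/<size>` form. As a minimal sketch, assuming that form, the following shell function works out the first and last ID that a value such as `1000950000/10000` covers. The name `uid_range_bounds` is hypothetical and not part of `oc`.

```shell
#!/bin/sh
# Hypothetical helper, not part of oc: print the first and last ID covered by
# a "<start>/<size>" range value such as the uid-range annotation in the sample.
uid_range_bounds() {
  range="$1"
  start="${range%/*}"   # text before the slash: first ID in the block
  size="${range#*/}"    # text after the slash: number of IDs in the block
  echo "$start $((start + size - 1))"
}

uid_range_bounds "1000950000/10000"
```

For the sample value, the block spans IDs 1000950000 through 1000959999, so a workload in that namespace would be expected to run with a UID inside that window.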

Knowledge Check

What project names are reserved for system use only?

Answer

openshift-* and kube-* are reserved project names.

oc new-project kube-example #Fails

oc create ns kube-example #Succeeds

What open source tool is used to provide route support?

Answer

oc exec -n openshift-ingress deploy/router-default -- /bin/sh -c "ps -ef | grep haproxy"

A workload needs to run with a User and Group ID within a range. How would you accomplish this without hardcoding the value in the container?

Answer

You could create a new project template as was shown above, but add an annotation that specifies the proper range:

openshift.io/sa.scc.uid-range: XXXXX/10000
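To check which range a namespace actually received, the annotation can be read back with `oc get` and a JSONPath expression. The following is a minimal sketch that assumes a logged-in `oc` session with permission to read the namespace. The function name `namespace_uid_range` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the uid-range annotation of the named namespace.
# Dots inside the annotation key are escaped so that JSONPath treats the key
# as a single field rather than a nested path.
namespace_uid_range() {
  oc get namespace "$1" \
    -o jsonpath='{.metadata.annotations.openshift\.io/sa\.scc\.uid-range}'
}

# Example (requires cluster access):
#   namespace_uid_range test
```

Reading the value at deploy time, rather than baking it into the image, keeps the container free of any hardcoded UID.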